Blog Statistics, Part 2

In a recent post, I talked about how I tracked my posts' views over time using Grafana and InfluxDB.

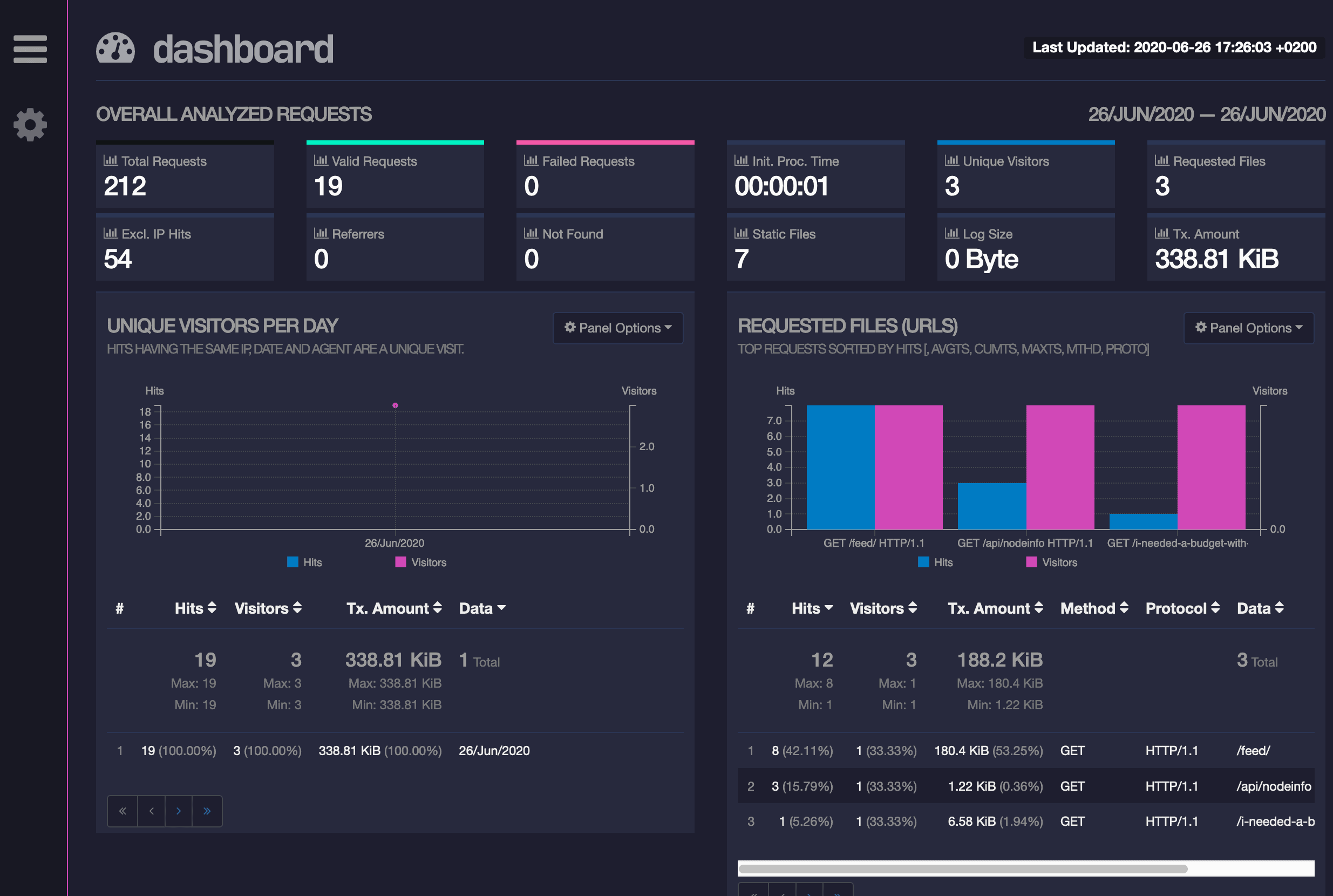

Today, I went another way and configured GoAccess to generate an HTML report from data extracted from my webserver's logs.

Challenge #100DaysToOffload No. 14

As I said, GoAccess works by parsing the logs from my webserver and extracting data from them. There's a surprising amount of data hidden in there. Here's an example line:

148.251.134.157 - - [26/Jun/2020:16:28:58 +0200] "POST /api/collections/gaugendre/inbox HTTP/1.1" 200 0 "-" "http.rb/4.4.1 (Mastodon/3.1.4; +https://mastodon.social/)"

- `148.251.134.157`: the IP address of the client making the request
- `- -`: two dashes for the logged-in user's identity. I don't use this feature, hence the dashes.
- `[26/Jun/2020:16:28:58 +0200]`: the date and time, with timezone
- `POST /api/collections/gaugendre/inbox HTTP/1.1`: the HTTP method, with path and protocol version
- `200`: the HTTP status code; 200 means "OK"
- `0`: the size of the response, in bytes
- `"-"`: the referrer (what page the user was on before)
- `"http.rb/4.4.1 (Mastodon/3.1.4; +https://mastodon.social/)"`: the user agent. It describes the browser or the program used to make the request. Here it's a Mastodon server.
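To make the field breakdown above concrete, here's a minimal Python sketch that splits a line like the example into named fields. This is not how GoAccess does it internally; the regular expression, the group names and the `parse_line` helper are my own assumptions, written for the common "combined" log layout that the example line appears to follow.

```python
import re
from datetime import datetime

# Assumed pattern for a "combined"-style access log line.
# Group names (ip, ident, user, ...) are illustrative, not from GoAccess.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_line(line):
    """Return a dict of fields for one log line, or None if it doesn't match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["status"] = int(fields["status"])
    # Some servers log "-" instead of a byte count; treat that as 0.
    fields["size"] = 0 if fields["size"] == "-" else int(fields["size"])
    # Example timestamp: 26/Jun/2020:16:28:58 +0200
    fields["time"] = datetime.strptime(fields["time"], "%d/%b/%Y:%H:%M:%S %z")
    return fields


# The example line from this post.
EXAMPLE = (
    '148.251.134.157 - - [26/Jun/2020:16:28:58 +0200] '
    '"POST /api/collections/gaugendre/inbox HTTP/1.1" 200 0 "-" '
    '"http.rb/4.4.1 (Mastodon/3.1.4; +https://mastodon.social/)"'
)

if __name__ == "__main__":
    for name, value in parse_line(EXAMPLE).items():
        print(f"{name}: {value}")
```

Lines that don't fit the pattern come back as `None` rather than raising, which seemed like the more forgiving choice for real-world logs.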

Every single request to the server is logged, which makes it easy to determine which pages are requested most and, most importantly, by whom. Do my 100 views come from an indexing bot or from 100 different people? That's not the same thing! While the simple count from WriteFreely can't answer these questions, the logs can!
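As a rough illustration of the "one bot or 100 people?" question, here's a small Python sketch that takes already-parsed requests and counts, per path, the raw hits, the distinct client IPs and the hits that look like they come from a bot. The `summarize` function, the `BOT_MARKERS` list and the substring heuristic are all assumptions of mine; GoAccess has its own, more thorough logic for visitors and crawlers.

```python
from collections import defaultdict

# Hypothetical heuristic: substrings that often show up in crawler user agents.
BOT_MARKERS = ("bot", "crawler", "spider")


def looks_like_bot(user_agent):
    """Very naive check: does the user agent contain a known bot marker?"""
    lowered = user_agent.lower()
    return any(marker in lowered for marker in BOT_MARKERS)


def summarize(requests):
    """Summarize an iterable of (ip, path, user_agent) tuples per path.

    Returns {path: {"hits": int, "unique_visitors": int, "bot_hits": int}},
    where unique_visitors counts distinct IPs among non-bot requests.
    """
    hits = defaultdict(int)
    bot_hits = defaultdict(int)
    visitor_ips = defaultdict(set)
    for ip, path, user_agent in requests:
        hits[path] += 1
        if looks_like_bot(user_agent):
            bot_hits[path] += 1
        else:
            visitor_ips[path].add(ip)
    return {
        path: {
            "hits": hits[path],
            "unique_visitors": len(visitor_ips[path]),
            "bot_hits": bot_hits[path],
        }
        for path in hits
    }
```

Counting distinct IPs is only an approximation of "different people" (several readers can share one address, and one reader can have several), but it already tells you much more than a bare view counter.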

I might take it a step further and dump everything to an Elastic stack someday, but in the meantime you can access the reports generated by GoAccess for my blog at https://reports.augendre.info (now dead). I'm currently facing some issues that I don't yet understand with generating the reports regularly via crontab, but I'm on it :)

Update 2020-06-26 18:07: The crontab issue is resolved; reports are now updated automatically every 5 minutes.