Context-induced performance bottleneck in Go

Misused go contexts can lead to severe performance issues.

🏞️ Setting up the… context 🥁

The documentation for the context package states that they carry "deadlines, cancellation signals, and other request-scoped values across API boundaries and between processes."

At OVHcloud, we use a logging library which uses contexts to add structured metadata to log messages. One obvious example is adding a request ID to all logs emitted from the scope of a single request.

We noticed slowness in a message processing microservice and tracked it down to… the context! We were looping through the messages and on each iteration we set a new ID on the context to help group logs by message, like this:

func processMessages(ctx context.Context, messages chan Message) {

for msg := range messages {

ctx = context.WithValue(ctx, "msgID", newMsgID())

// do something with the message, including logging

}

}🕵🏻♂️ Understanding (and fixing) the issue

The issue boils down to the implementation of the context.WithValue and Context.Value in Go. context.WithValue wraps the parent context in a new one containing the desired value. So when "adding" two values to an empty context, you're in fact ending up with nested contexts:

In order to extract the value for the foo key, you can use ctx.Value("foo"). It will traverse all the nested contexts, checking if the current one holds a value for the requested key. When it finds one, it stops and returns the value.

Now what happens when processing lots of messages, like in the example above? Well… something like this:

For each loop iteration, a new context is created to wrap the previous ones because we didn't shadow the variable in the loop.

The fix is very easy: use := instead of = to shadow the outer context:

func processMessages(ctx context.Context, messages chan Message) {

for msg := range bus.Messages() {

// notice the `:=` instead of `=` below

ctx := context.WithValue(ctx, "msgID", newMsgID())

// ...

}

}golangci-lint since v1.58.0. I also published a follow-up article to document the process.📈 Measurements

The performance delta is staggering. What follows is a snippet that outputs the time each loop iteration takes to retrieve the value from either a shadowed context, or a "fat" context.

package main

import (

"context"

"fmt"

"time"

"github.com/gofrs/uuid"

)

const key = "key"

func main() {

const times = 10_000

// Setup the value we want to retrieve in each iteration

ctx := context.WithValue(context.Background(), key, "some-val")

fat(ctx, times)

shadow(ctx, times)

}

func fat(ctx context.Context, times uint64) {

for range times {

// wrap the context, each iteration makes it bigger

ctx = contextWithRandom(ctx)

start := time.Now()

// simulate the logging lib which retrieves context values

_ = ctx.Value(key)

fmt.Printf("fat,%v\n", time.Since(start).Nanoseconds())

}

}

func shadow(ctx context.Context, times uint64) {

for range times {

// shadow the context, each iteration creates a new one and it doesn't grow

ctx := contextWithRandom(ctx)

start := time.Now()

_ = ctx.Value(key)

fmt.Printf("shadow,%v\n", time.Since(start).Nanoseconds())

}

}

func contextWithRandom(ctx context.Context) context.Context {

return context.WithValue(ctx, "other_key", uuid.Must(uuid.NewV4()))

}

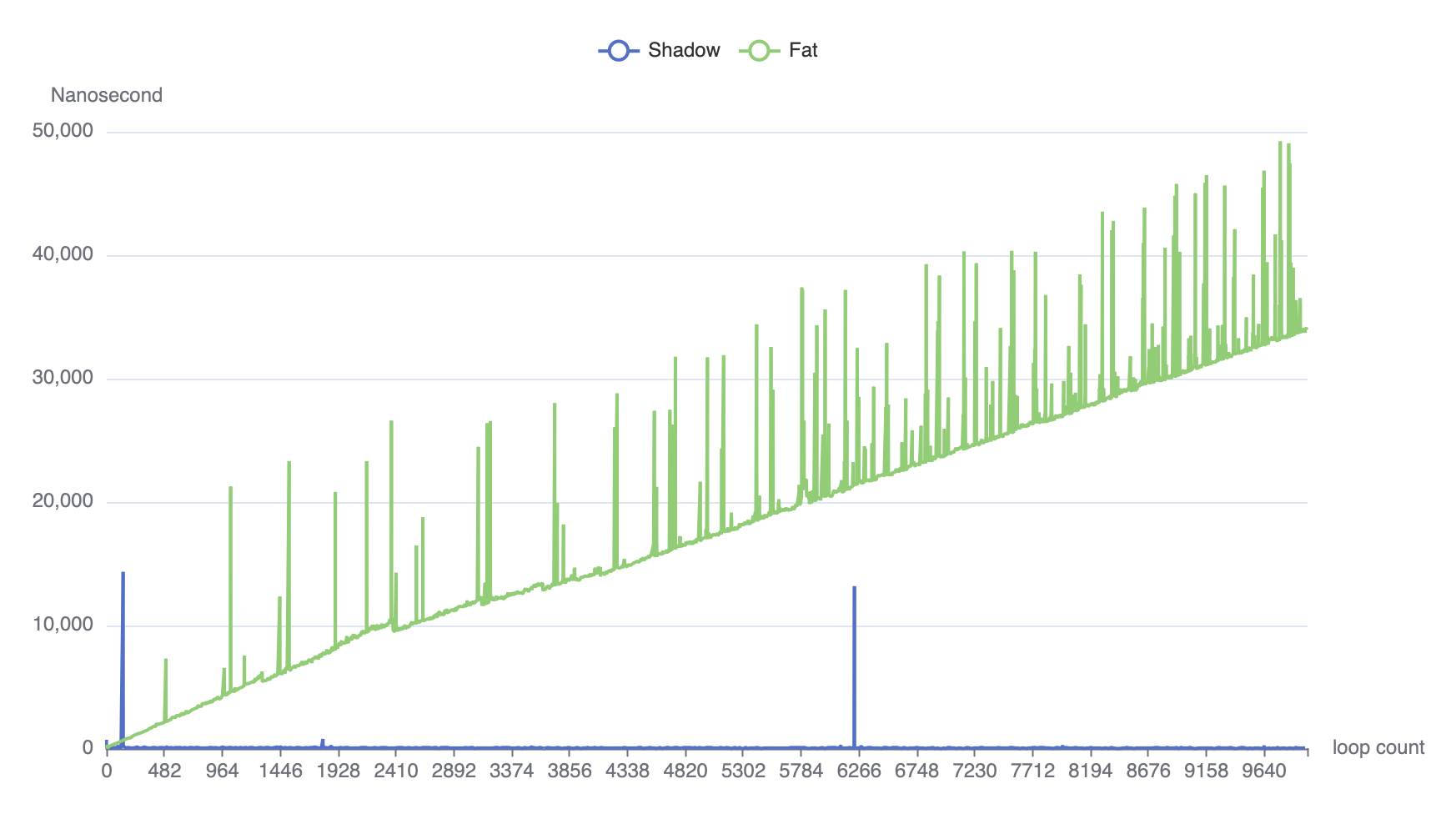

Here's a graph plotting the data output by the previous program. After just 2400 iterations, the ctx.Value call in the fat version already takes 10 µs per value. If your logging library retrieves 10 values, that's 0.1 ms spent just retrieving values to emit a single log line. And since it grows proportionally to the number of wrapped contexts, the slowdown increases over time, making the service sluggish after just a few hours.

I don't see any case where we'd want to use a context value after a loop iteration – and in fact in most cases we don't want this, because of the logging library which would then leak some values after the loop – so as a rule of thumb I now always shadow my contexts inside loops.

Here's a benchmark showing similar results in a more textual way.

package main_test

import (

"context"

"fmt"

"math/rand/v2"

"testing"

)

const key = "key"

func BenchmarkContext(b *testing.B) {

benchmarks := []struct {

times uint64

}{

{1_000},

{10_000},

{100_000},

}

for _, bm := range benchmarks {

ctx := context.WithValue(context.Background(), key, "some value")

b.Run(fmt.Sprintf("shadow %v", bm.times), func(b *testing.B) {

for i := 0; i < b.N; i++ {

shadow(ctx, bm.times)

}

})

b.Run(fmt.Sprintf("fat %v", bm.times), func(b *testing.B) {

for i := 0; i < b.N; i++ {

fat(ctx, bm.times)

}

})

}

}

func shadow(ctx context.Context, times uint64) {

for range times {

ctx := contextWithRandom(ctx)

_ = ctx.Value(key)

}

}

func fat(ctx context.Context, times uint64) {

for range times {

ctx = contextWithRandom(ctx)

_ = ctx.Value(key)

}

}

func contextWithRandom(ctx context.Context) context.Context {

return context.WithValue(ctx, "other_key", rand.Int64())

}

The results also show the discrepancy in growth rates between the shadow and fat implementations.

$ go test -bench=.

goos: darwin

goarch: arm64

pkg: github.com/Crocmagnon/fat-contexts

BenchmarkContext/shadow_10-8 2261456 763.0 ns/op

BenchmarkContext/fat_10-8 1662235 743.6 ns/op

BenchmarkContext/shadow_100-8 236544 4888 ns/op

BenchmarkContext/fat_100-8 53778 22275 ns/op

BenchmarkContext/shadow_1000-8 24499 48474 ns/op

BenchmarkContext/fat_1000-8 711 1698109 ns/op

BenchmarkContext/shadow_10000-8 2472 489804 ns/op

BenchmarkContext/fat_10000-8 6 170819118 ns/op

BenchmarkContext/shadow_100000-8 248 4938549 ns/op

BenchmarkContext/fat_100000-8 1 17150788208 ns/op

PASS

ok github.com/Crocmagnon/fat-contexts 31.454sTo learn more about Go benchmarks output format, head over to the documentation. There are a couple of interesting things to note here:

- the fat implementation is slower even at small sample sizes ;

- when multiplying the loop count by 10, the shadow implementation is 10 times slower as expected but the fat one is 100 (=10²) times slower.

This last point can be explained by the fact that retrieving the value is a constant-time operation (O(1)) in the shadow variant and proportional to the loop size (O(n)) in the fat variant, which is what we observed in the chart above.

If you want to reproduce my results, the code is available on GitHub:

Thanks for reading!